Bitsight is moving fast, but we don’t want to sacrifice code quality for speed, which is why tests have always played an important role in our development process. Although we don’t practice test-driven development (TDD), we do write a lot of tests, and a key requirement for test-heavy development is that the full test suite runs fast. If running all tests takes less than 5 minutes, developers are more likely to run them frequently and keep adding more tests. However, Bitsight's portal application is a bit of a monolith, and its test suite takes far longer to run than we would like.

The Problem

This huge yet important monolith of ours was created over years. We are actively working on breaking it apart. At the same time, we can’t stop making changes to it, and we need to be confident when we do. Fortunately, we have accumulated over 5000 tests of all types: unit tests, integration tests, and user action simulation tests. Some of our recent test runs took up to 3 hours and 26 minutes to complete. Even worse, running a single test took almost an hour, because every run paid the same setup cost. That was no longer acceptable, so we decided to take action.

A closer look

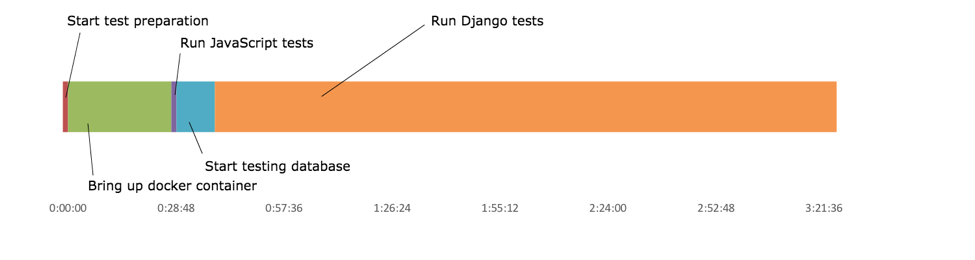

In order to solve this problem, we needed to understand the problem better. Here’s a breakdown of the test run, which initially took 3.5 hours:

As we can see from the graph, three steps were taking significantly more time than they should: setting up the docker container, starting the testing database, and, of course, the actual test running step. Let’s review them one by one.

Docker Container Setup

Docker is known for being lightweight and agile, so why was it taking around 30 minutes to bring up the container? It turns out we hadn’t been maintaining our Docker image at all. The image still provided the same environment as when we first created it. To keep the tests runnable, a lot of setup steps, including installing missing Python packages, installing Node.js packages, and building static assets, had been added as initialization steps that ran every time the container started.

Bitsight’s portal is a Python-based application, so the image should include the necessary Python environment and nothing else, and it should be rebuilt whenever that environment changes. Building static assets isn’t a reasonable thing to do inside this Python image. It should be done in a much more lightweight, plain Node.js container. This should reduce the static asset build time slightly, and the Python container setup time a lot.

Django Testing Database Setup

Django is the web framework we use to build the Bitsight portal. In this step, the framework initializes the database for running tests. To provide consistent test data, we were creating an empty database and loading a pre-defined data fixture. Neither of these steps sounds heavy, yet together they took around 10 minutes.

After several test runs, we noticed that when Django reached the “Rendering models…” step while applying migrations, the process would hang there for 5 minutes before it actually started applying them. A little research revealed that luck really wasn’t on our side: this is a bug in Django. The bug was introduced in an older version and has been fixed in a later one. Since upgrading the core framework of a legacy monolith wasn’t an option for a quick fix, we had to find another way around.

Django runs migrations on test databases so that, if the database has an initial state, it can be migrated to the latest state. However, we did not have any initial state. We started from an empty database, and loaded data into it once it had all the tables and columns. When running tests, if Django can’t find any migrations for an app, it performs an operation previously known as “syncdb”, which maps the model state from the code directly to the database. All we needed to do was trick Django into thinking that there weren’t any migrations. Inspired by this snippet, we applied the same trick to our test environment only. It not only removed the entire “Rendering models” block, but also saved the time it took to actually apply migrations. This step now takes less than a minute.
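As a rough illustration, a widely shared form of this trick looks like the sketch below. It assumes an older Django release (the 1.7/1.8 era), where an app whose migrations module cannot be imported is treated as unmigrated. The class name `DisableMigrations`, the helper `is_test_run`, and the placeholder module name `notmigrations` are illustrative choices, not necessarily what the portal's settings contain.

```python
import sys


class DisableMigrations(object):
    """Mapping-like stand-in for Django's MIGRATION_MODULES setting."""

    def __contains__(self, app_label):
        # Claim to have an override for every installed app.
        return True

    def __getitem__(self, app_label):
        # Point every app at a module name that does not exist, so the
        # migration loader (on the assumed Django version) finds nothing
        # and falls back to creating tables directly from the models.
        return "notmigrations"


def is_test_run(argv):
    """Return True when the command line looks like `manage.py test ...`."""
    return len(argv) > 1 and argv[1] == "test"


# In a settings module (hypothetical placement): only override the
# setting for test runs, so normal environments still use migrations.
if is_test_run(sys.argv):
    MIGRATION_MODULES = DisableMigrations()
```

Newer Django versions document mapping an app label to `None` in `MIGRATION_MODULES` for the same purpose, so the placeholder string would need to change after an upgrade.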

Running the Tests

At this point, we’d already reduced the preparation time to 9 minutes, most of which was spent building static assets. That’s pretty much the best we could do there. Our attention now turned to the test running phase. Running tests doesn’t take many resources, and the tests are completely independent of each other, so running them in parallel makes perfect sense. In fact, Django now supports that natively. Unfortunately, we aren’t running a version that does. For the same reason as before, we had to find another way around.

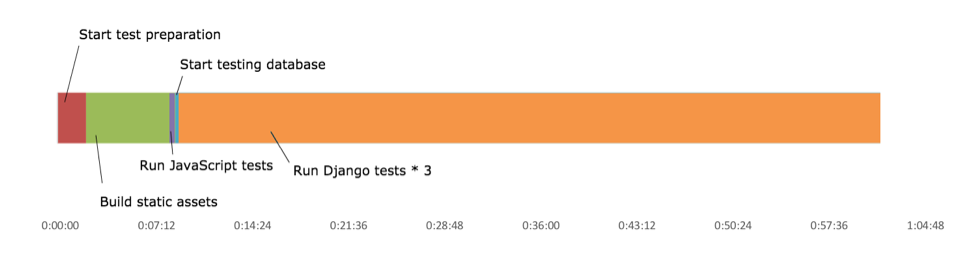

Previously, when we ran tests in different containers, spinning up the containers took almost 30 minutes, so running tests in parallel didn’t make much of a difference. However, with the optimizations done to the docker image, bringing the container up now takes no time and very few resources. After some experimenting , we decided to go with 3 containers, and start them up with a slight time difference so they won’t compete for CPU and disk IO on initialization. Without too much trouble, we have managed to finish the test run and collect results together at the end, and the test running time has now been reduced to 52 minutes. Here’s our new timeline:
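The container-level parallelism described here can be sketched in a few lines of Python. This is a simplified illustration under assumptions, not our actual runner: the function names, the round-robin split, and the 30-second default stagger are all hypothetical, and `build_command` stands in for whatever command starts one test container for a given list of test labels.

```python
import subprocess
import time


def split_into_shards(test_labels, shard_count):
    """Round-robin the test labels into shard_count roughly equal groups."""
    return [test_labels[i::shard_count] for i in range(shard_count)]


def run_shards(shards, build_command, stagger_seconds=30):
    """Start one process per shard with a delay between starts.

    build_command is a caller-supplied function that turns a list of
    test labels into an argv list. Returns the exit code of every
    shard, in shard order.
    """
    processes = []
    for index, shard in enumerate(shards):
        if index > 0:
            # Stagger start-up so shards do not fight over CPU and disk
            # I/O while they initialize.
            time.sleep(stagger_seconds)
        processes.append(subprocess.Popen(build_command(shard)))
    # Wait for every shard; the caller can merge results afterwards.
    return [process.wait() for process in processes]
```

A run is then considered green only if every returned exit code is zero. Round-robin splitting is the simplest choice; splitting by recorded test duration would balance the shards better if some test modules are much slower than others.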

End Result

So far, we’ve made the following major optimizations:

- Dependencies are now all pre-installed when building the Docker image

- Django migrations are not run when setting up the test database

- Django tests are now running in parallel

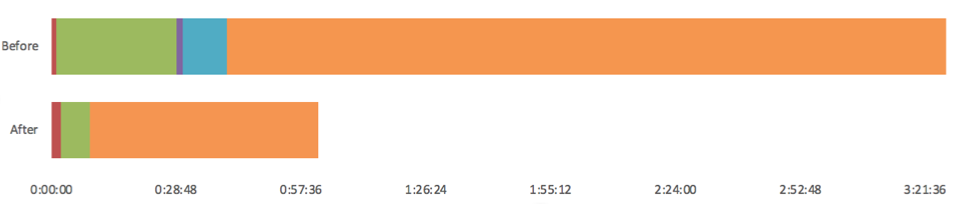

With all these changes, we’ve managed to reduce the total test time from more than 3 hours down to 1 hour. If we put the two timelines together, it’s easy to see the big improvement:

In addition to that, we have sped up local development, enabled the possibility of running the portal in our Kubernetes infrastructure, and last but not least, we can continue to ship things fast!