
Behind the Screen: The Peril of Neglecting Mobile Apps

Introduction

Everyone knows that running outdated computer applications comes with annoyances and risks. An outdated application might suffer from performance issues or simply be slower than modern versions. It might have compatibility issues and limited functionality. It might lose technical support and even fail to meet current industry standards and regulations, which could expose your organization to non-compliance penalties and legal action. But of all the risks an outdated application can bring, one of the most concerning is security: outdated software may contain potential or known security flaws that attackers can exploit to gain unauthorized access.

What about applications that run on smartphones? Are they any different from an application that runs on a computer in terms of security risks? Well… yes and no.

As our smartphones grow old, they tend to get cluttered with unused applications. Those applications, and even system applications, can become silently obsolete. Read on to learn more about how such issues led to a massive compromise of millions of smartphones worldwide.

Same difference

It is fairly easy to draw parallels between mobile applications and computer software. As modern operating systems support a greater range of hardware platforms, it sometimes gets harder to even tell the difference. Is it a tablet or a laptop? Or both? In this article, we use ‘app’ in its common sense: a mobile application that runs on our Android/iOS smartphones.

Yes, outdated smartphones mostly share the same problems as outdated computers. What makes them a bit different is how the user interacts with the device, how the app life cycle usually works, and how manufacturers and service providers push their software onto our devices.

It is common for users to install and test several applications or games that eventually get uninstalled. Eventually. The ease of installing from digital stores invites experimentation, and users can choose among a myriad of applications that solve a particular problem before settling on one. But time goes by and, more often than not, we forget to uninstall the other apps we tried and no longer use. I admit to keeping some old apps just in case I need them again.

Many apps are ‘free’, but neither free as in ‘free speech’ nor as in ‘free beer’. As Tristan Harris, a former Google design ethicist, puts it: “if you’re not paying for the product, you are the product”. So ‘free’ apps usually come with a trove of third-party ad networks, usage telemetry and other functionality the user didn’t really want. But the user is willing to pay the price of ‘watching a couple of ads’.

Ad network and telemetry SDKs (Software Development Kits) are sets of tools and libraries that developers use to integrate third-party services into their applications. Ad network SDKs let developers plug advertising platforms into their apps, so app owners can monetize their content by displaying ads to users. Telemetry SDKs let developers collect data about user behavior within their apps, which can be used to improve the user experience, optimize app performance, and identify areas for improvement. Both are important in mobile app development because they help developers monetize their apps and understand how they are used. All of these components can also ship in ‘regular’ computer software, but they are more common in smartphone apps.

But these apps get old. The SDKs they use for ad networks and telemetry become obsolete. Callback endpoints evolve or switch to new technologies. All of these components can be deprecated, each at a different point in time.

The usual app life cycle also makes it easy for apps to wake up and send telemetry back to their servers. Many mobile apps keep running long after the user stops interacting with them: they may start processes at system boot, they may not really be closed when the user switches to another application, or they may be started by some external event. The point is that it is fairly common for them to be running without the user even noticing.

And then there are the numerous system apps, custom packages and third-party components that come preinstalled by the smartphone vendor. They too are subject to deprecation, and they are especially dangerous given the broad permissions system applications usually have.

So, yes, mobile applications are similar to computer software, but they have some interesting nuances, both in the way they work and in the way we use them. In this blog post, we will explore what happens when a mobile app is deprecated and its API (Application Programming Interface, the set of “rendezvous” calls programmers use to interact with an application) endpoints fall into disuse, and how catastrophic that can turn out to be.

But before we go any further, I would like to introduce a concept for readers who might not be familiar with it: sinkholes.

Sinkholes

DNS sinkholing involves redirecting a domain name to infrastructure under your control. One way to achieve this is simply to register expired domain names. It is common for deprecated apps to keep trying to communicate with servers whose domain names have long since expired. Depending on the purpose of the system that has been sinkholed, there can be several benefits:

- Research: Setting up a DNS sinkhole can provide valuable data for cybersecurity researchers studying malicious behavior online. This information can be used to improve security measures, prevent future attacks, improve fingerprinting capabilities, and extract malware configurations, among other uses.

- Infection detection: If an IP address contacts a sinkholed domain that belonged to known C2 (command-and-control) infrastructure, the host behind it is likely infected with the respective malware.

- Telemetry abuse detection: By analyzing traffic, it is possible to detect cases where applications were sending far more telemetry to their servers than is acceptable.

- Botnet takedown: Some botnets might be successfully taken offline by controlling the communication between the infected machines and the sinkholed server.

These techniques can, of course, also be used by attackers to take control of legitimate infrastructure. Subdomain takeover, a well-known attack, is a form of sinkholing that keeps claiming high-profile victims, such as Microsoft, Uber, Slack and United Airlines, to name just a few. Additionally, many companies fail to renew domain names they registered to support internal service discovery. When defenders register these lapsed domains first, bad actors can no longer claim them for malicious purposes.

When registering domains for sinkholing, knowing exactly what to register is both the challenge and the key to a successful monitoring operation. At Bitsight, we monitor malware at Internet-wide scale, and we have developed several methods to assist with this complex process. Although this is a very interesting topic, it is beyond the scope of this article.
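Once a domain resolves to infrastructure you control, what remains is a listener that accepts whatever stale clients send and records it. The Java sketch below is a toy version of that idea using the JDK's built-in `com.sun.net.httpserver` package. The class, record and field names are hypothetical, it binds to the loopback interface for demonstration, and it does none of the DNS, storage or scaling work a real sinkhole needs.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.InputStream;
import java.net.InetSocketAddress;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

public class SinkholeLogger {
    // One logged request: who called, what they asked for, and how much data they sent.
    // contentType is null when the client sends no Content-Type header.
    public record Event(String remoteIp, String method, String path, String contentType, int bodyLength) {}

    // Thread-safe in-memory log; a real deployment would ship events to storage instead.
    public static final List<Event> EVENTS = new CopyOnWriteArrayList<>();

    // Starts a listener on the given port (0 = any free port) that accepts every path,
    // records the request, and answers with an empty 200 response.
    public static HttpServer start(int port) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", port), 0);
        server.createContext("/", exchange -> {
            byte[] body;
            try (InputStream in = exchange.getRequestBody()) {
                body = in.readAllBytes();
            }
            EVENTS.add(new Event(
                    exchange.getRemoteAddress().getAddress().getHostAddress(),
                    exchange.getRequestMethod(),
                    exchange.getRequestURI().getPath(),
                    exchange.getRequestHeaders().getFirst("Content-Type"),
                    body.length));
            exchange.sendResponseHeaders(200, -1); // -1: no response body
            exchange.close();
        });
        server.start();
        return server;
    }
}
```

Replying with an empty 200 keeps the sketch simple; what a sinkhole should answer is itself a design decision, since some clients may change their behavior depending on the response they get.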

Our infrastructure

To give the reader a glimpse of our operation, and of what we mean when we say that we monitor malware at Internet-wide scale, let me present some statistics.

In one of our sinkholing infrastructures (yes, we have more than one), we log and monitor:

- ~3 billion network events per day

- ~730 million deduplicated and aggregated events per day

- ~30 million unique IPv4 and IPv6 addresses per day

- ~100 thousand domain names

It is fair to say that it is a big operation and infrastructure, and it is growing every single day. The sheer volume of traffic that needs to be researched, analyzed, dissected, classified and attributed can get quite intimidating.

Case study

So far we have talked about outdated mobile apps and sinkholes in theory. What about in practice? What happens when a widely used app is deprecated and someone happens to sinkhole a domain used by the app? Well, that depends on who that someone is.

It is fairly common for Bitsight to register domains that have been flagged as associated with malware or shady apps. In this case study, we will talk about one particular domain and our findings around it.

We noticed an unusually high number of requests to one of our sinkholed domains, mobilesystemservice[.]com, and decided to analyze its traffic patterns and content to better understand what it was. By high, I mean over twelve million (12,000,000) contacts per day, coming from both IPv4 and IPv6 addresses.

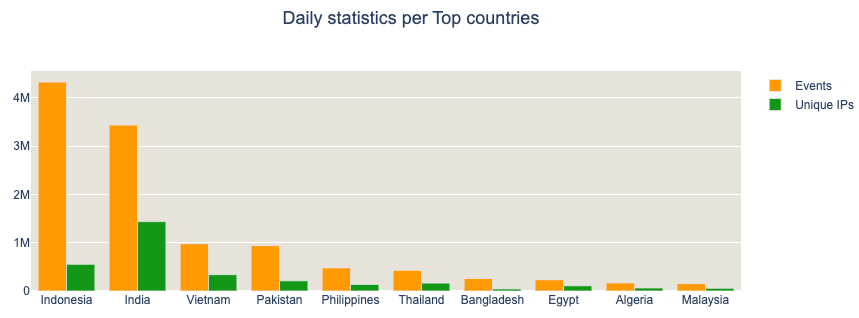

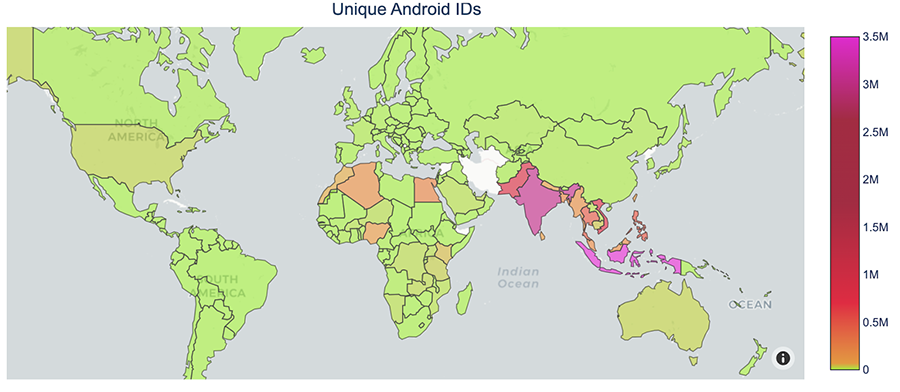

That’s quite significant, to say the least. The source IPs are concentrated mostly in APAC and Africa. Interestingly, Indonesia has a higher ratio of unique IPs to events than other countries.

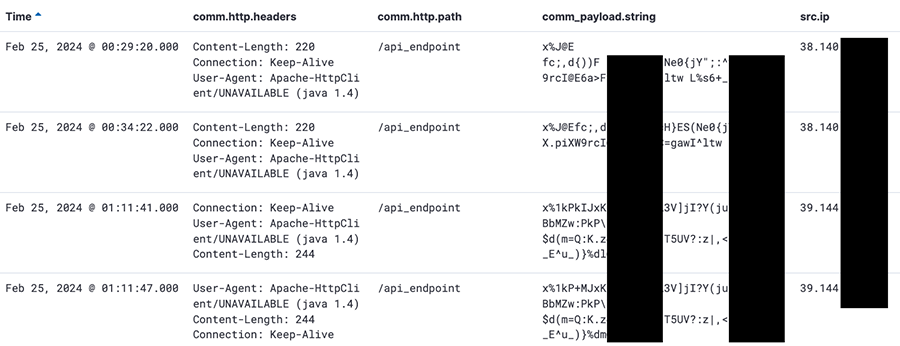

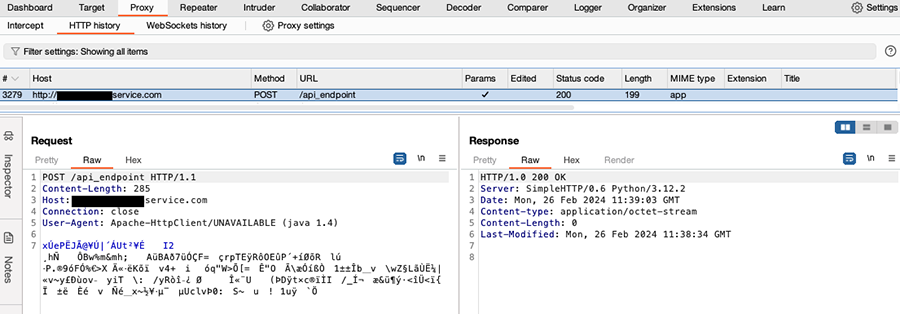

The requests were overwhelmingly HTTP POST requests with a binary payload to the /api_endpoint endpoint. Looking at the POST data, the payload format was not immediately obvious, and the headers do not specify a content type either. Here are some selected examples from our sinkhole:

So we are getting this data in vast numbers, but what could we tell about the domain itself?

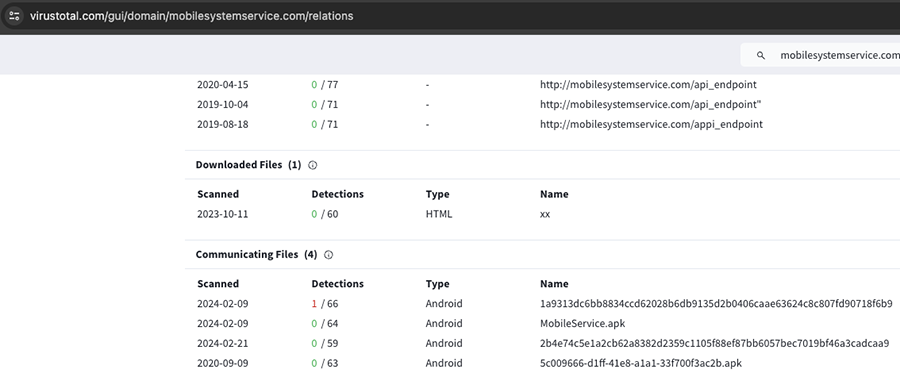

A VirusTotal search showed that the domain is being used by some Android applications. That is good news because an Android application would provide the information we need to understand the payload.

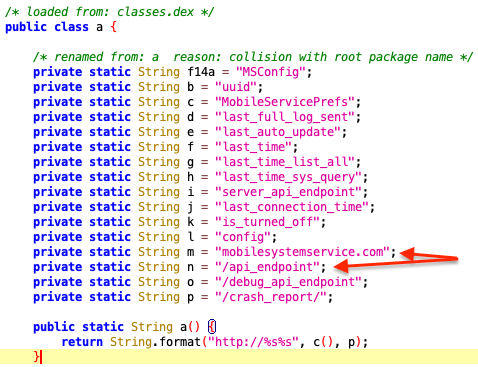

By downloading one of the APKs and analyzing it, we could quickly confirm that it contains the code responsible for the POST calls, with the hardcoded endpoints.

Indeed, there is the domain and the most called endpoint (/api_endpoint) we were looking for, inside the mobile app. That means it's about time to spin up an Android emulator, point it to our favorite intercepting proxy, confirm we can get the payloads and start dissecting. After all, it is not even using HTTPS, just plain old unencrypted HTTP.

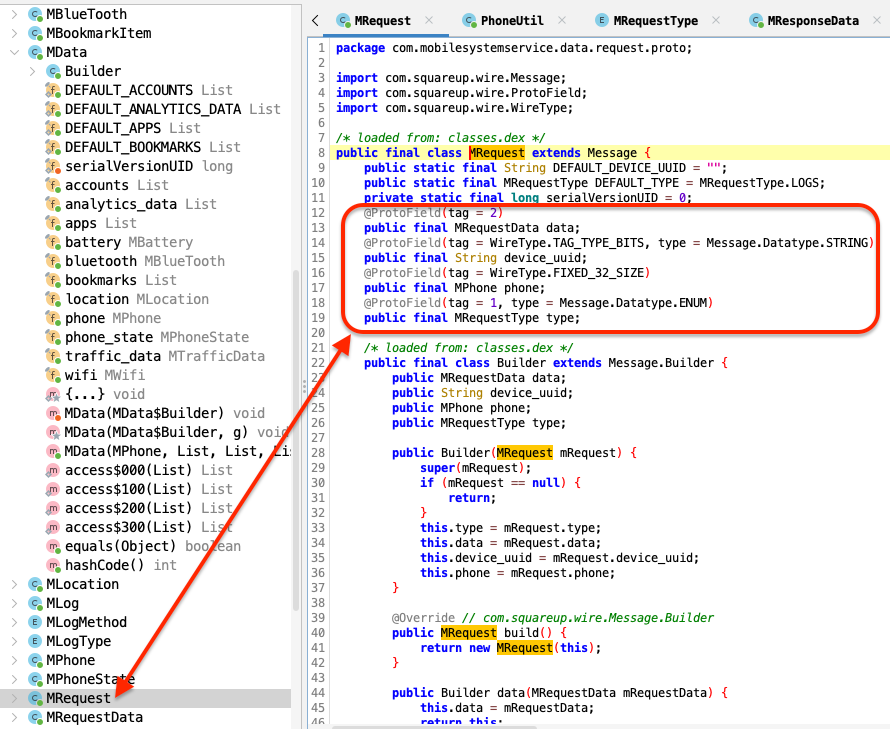

Looking at the libraries used by the APK, we can see that the class that implements the requests has some @ProtoField annotations, and that the squareup.wire package is present. That hints at the use of Wire. Protocol Buffers, also known as Protobuf, is a schema language and binary encoding defined by Google; Wire is an independent implementation from Square designed specifically for Android and Java.

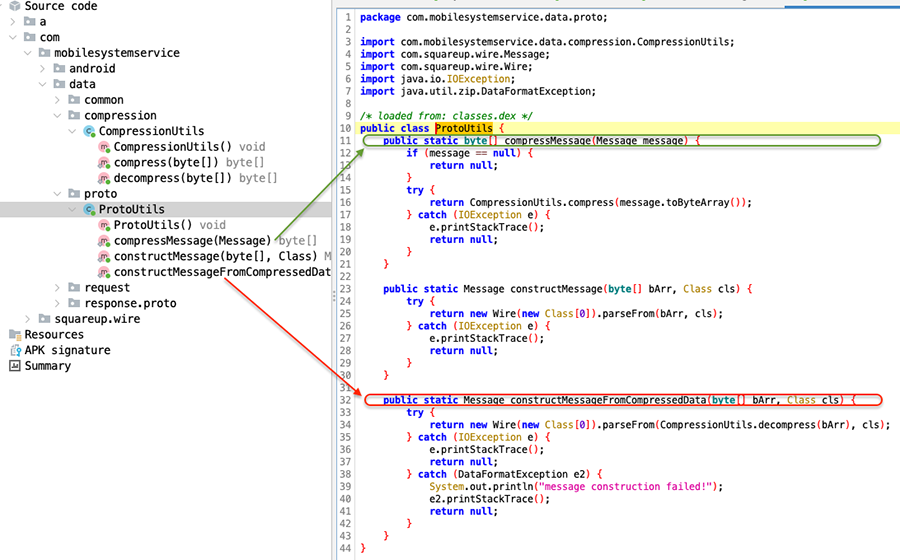

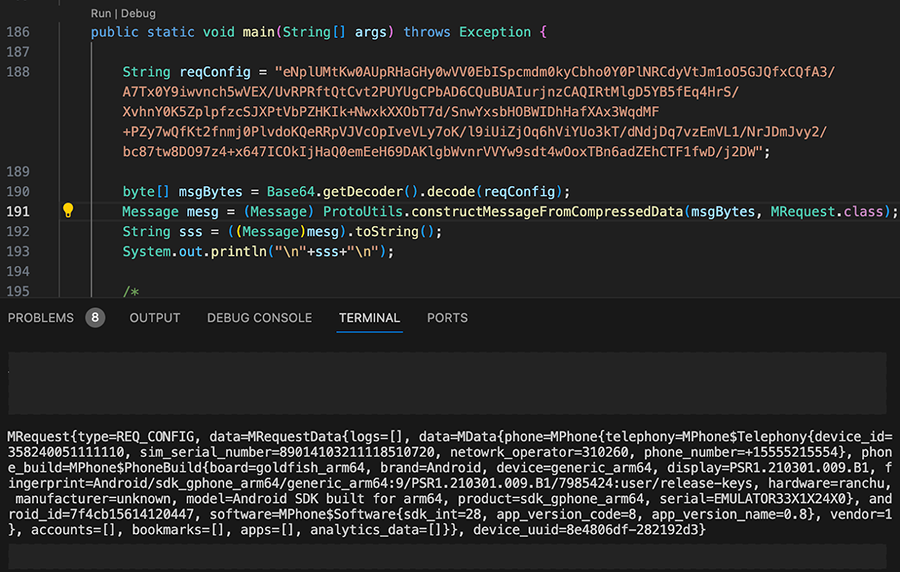

An MRequest object has a type, a device_uuid, phone information (more on this later) and some arbitrary data. But the payload itself is not plain Protobuf: it goes through a final compression step before it is sent to the server. Reading works in reverse: data is first inflated, then parsed as Protobuf. That is what the ProtoUtils class is for. In the image below, the method highlighted in red decodes data coming from the server, while the one highlighted in green compresses the Protobuf payload before it is sent.

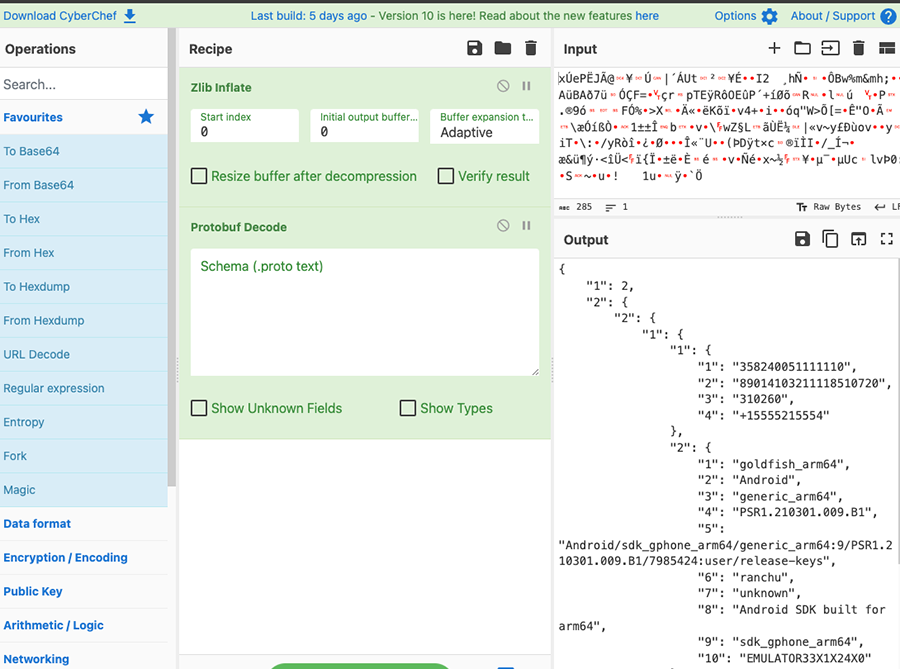
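The two directions handled by ProtoUtils can be approximated with the JDK's own `java.util.zip` classes. This is not the app's code: the class and method names are hypothetical, and it assumes the zlib format (a two-byte header plus a DEFLATE stream), which is consistent with the zlib-inflate-then-Protobuf recipe used to decode the captured payloads.

```java
import java.io.ByteArrayOutputStream;
import java.util.Base64;
import java.util.zip.DataFormatException;
import java.util.zip.Deflater;
import java.util.zip.Inflater;

public class PayloadCodec {
    // "Send" direction: compress already-serialized Protobuf bytes into a zlib stream.
    public static byte[] deflate(byte[] protobuf) {
        Deflater deflater = new Deflater(); // default settings emit a zlib header
        deflater.setInput(protobuf);
        deflater.finish();
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        byte[] buf = new byte[4096];
        while (!deflater.finished()) {
            int n = deflater.deflate(buf);
            out.write(buf, 0, n);
        }
        deflater.end();
        return out.toByteArray();
    }

    // "Receive" direction: inflate a zlib stream back into raw Protobuf bytes.
    public static byte[] inflate(byte[] compressed) throws DataFormatException {
        Inflater inflater = new Inflater();
        inflater.setInput(compressed);
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        byte[] buf = new byte[4096];
        try {
            while (!inflater.finished()) {
                int n = inflater.inflate(buf);
                // No progress and no way to make any: the stream is truncated or needs a preset dictionary.
                if (n == 0 && (inflater.needsInput() || inflater.needsDictionary())) {
                    throw new DataFormatException("truncated or dictionary-based zlib stream");
                }
                out.write(buf, 0, n);
            }
        } finally {
            inflater.end();
        }
        return out.toByteArray();
    }

    // Convenience for payloads stored or shared as Base64 text.
    public static byte[] inflateBase64(String b64) throws DataFormatException {
        return inflate(Base64.getDecoder().decode(b64));
    }
}
```

The bytes returned by `inflate` are still schema-less Protobuf; turning them into named fields requires the message definitions, or the app's own classes.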

Knowing the data format, we can now quickly validate it with external tools such as CyberChef, which lets us actually see that the decoding process works. The recipe, applied to the captured POST request payload, has two steps: Zlib Inflate, then Protobuf Decode.
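An unlabeled binary body can often be recognized as zlib from its first two bytes alone. RFC 1950 requires the low nibble of the first byte (CMF) to be 8 (DEFLATE), its high nibble (CINFO, the window size) to be at most 7, and the 16-bit value CMF × 256 + FLG to be a multiple of 31. The sketch below checks exactly that. It is a heuristic, not proof, since some random byte pairs also pass, and the class name is hypothetical.

```java
public class ZlibSniffer {
    // True if the first two bytes form a plausible zlib (RFC 1950) header.
    public static boolean looksLikeZlib(byte[] data) {
        if (data == null || data.length < 2) return false;
        int cmf = data[0] & 0xFF;
        int flg = data[1] & 0xFF;
        boolean deflateMethod = (cmf & 0x0F) == 8;      // CM = 8 means DEFLATE
        boolean windowOk = (cmf >> 4) <= 7;             // CINFO: window of at most 32 KiB
        boolean checkOk = ((cmf << 8) | flg) % 31 == 0; // FCHECK makes CMF*256+FLG divisible by 31
        return deflateMethod && windowOk && checkOk;
    }
}
```

Common zlib streams start with 0x78 0x01, 0x78 0x9C or 0x78 0xDA depending on the compression level, which is why a leading 0x78 is often the first clue when eyeballing a hex dump.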

We can see some field values that make sense, coming from our test device, but we can’t see any field names. The Protobuf wire format is highly optimized for space and identifies fields only by number and wire type, so decoding a message without its schema definition yields incomplete output. To get proper output, with all field names and values, we can try to extract the Protobuf schema from the Java classes. We tried a couple of readily available tools, but none achieved a satisfactory result.
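Why the names disappear is visible in the wire format itself: each field is prefixed only by a varint key equal to (field_number << 3) | wire_type. The sketch below, in the spirit of a schema-less "Protobuf Decode", walks the top-level fields of one message and keeps the field number, wire type and raw value. It is a simplified illustration with hypothetical names: it rejects the deprecated group wire types (3 and 4) and does not try to guess whether a length-delimited value is a string, raw bytes or a nested message.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class RawProtobuf {
    // One decoded field: the key gives number and wire type; the value stays uninterpreted.
    public record Field(int number, int wireType, long varint, byte[] bytes) {}

    private final byte[] buf;
    private int pos;

    private RawProtobuf(byte[] buf) {
        this.buf = buf;
    }

    // Base-128 varint: 7 value bits per byte, high bit set on every byte except the last.
    private long readVarint() {
        long result = 0;
        for (int shift = 0; shift < 64; shift += 7) {
            if (pos >= buf.length) throw new IllegalArgumentException("truncated varint");
            byte b = buf[pos++];
            result |= (long) (b & 0x7F) << shift;
            if ((b & 0x80) == 0) return result;
        }
        throw new IllegalArgumentException("varint longer than 10 bytes");
    }

    // Copies the next n bytes (a fixed-width or length-delimited value).
    private byte[] take(long n) {
        if (n < 0 || n > buf.length - pos) throw new IllegalArgumentException("truncated field");
        byte[] out = Arrays.copyOfRange(buf, pos, pos + (int) n);
        pos += (int) n;
        return out;
    }

    // Decodes the top-level fields of one message without knowing its schema.
    public static List<Field> decode(byte[] message) {
        RawProtobuf r = new RawProtobuf(message);
        List<Field> fields = new ArrayList<>();
        while (r.pos < message.length) {
            long key = r.readVarint();
            int number = (int) (key >>> 3);
            int wireType = (int) (key & 0x7);
            switch (wireType) {
                case 0 -> fields.add(new Field(number, 0, r.readVarint(), null));       // varint
                case 1 -> fields.add(new Field(number, 1, 0, r.take(8)));               // fixed 64-bit
                case 2 -> fields.add(new Field(number, 2, 0, r.take(r.readVarint()))); // length-delimited
                case 5 -> fields.add(new Field(number, 5, 0, r.take(4)));               // fixed 32-bit
                default -> throw new IllegalArgumentException("unsupported wire type " + wireType);
            }
        }
        return fields;
    }
}
```

For example, the bytes `08 96 01 12 03 61 62 63` decode to field 1 (varint 150) and field 2 (three bytes, "abc"), but nothing in the data says what those fields are called.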

Another option is to use the APK’s own methods to decode the messages. dex2jar converts the APK’s Dalvik bytecode into a JAR file, which can then be imported into a normal Java project. Decoding then becomes trivial.

The example below shows the payload encoded in Base64 and stored as a String, a convenient way to demonstrate the decoding in a couple of lines of Java.

If you noticed the class name a couple of images back, and/or are paying attention to the decoded message and values, you probably have an eyebrow raised… or two.

So what exactly is the app doing and what is it used for?

Telemetry at scale

Analyzing the app and its behavior allowed us to understand what it was doing, and it is pretty appalling. This application can log and upload to a central server the following information about a user and their smartphone:

| Category | Detail |
|---|---|
| Accounts | Name, Type |
| Apps | App title, Package name |
| Battery | Voltage, Health, Temperature, Charging status |
| Bluetooth | Paired devices UUIDs, Names, Bond state, Status |
| Bookmarks | Title, URL |
| Browsing History | Title, URL |
| Location | GPS coordinates (Longitude, Latitude, Altitude, Bearing, Accuracy) |
| Phone | Phone Number, SIM Card number, Network operator, Phone State |
| Device Info | Android ID, Device Serial, Hardware Fingerprint, Brand/Model/Product/Manufacturer |
| Traffic Data | RX/TX statistics |
| WIFI | IP address, MAC address, SSID / BSSID, RSSI / Link speed |

It can also install APKs on the device via its update procedure (download and install), which the server can force.

This app logs so much personal data that it is hard to see why a non-malicious app would need it. But if you think this is bad, here’s the kicker: this app can’t be installed from the Play Store, nor is it trivial to uninstall. It doesn’t even have an icon you can tap; it is more of a component or library than an actual application, like an OEM component. Either it is dropped by another app somehow, which is already sketchy behavior, or it comes preinstalled with the device. It also seems to be highly focused on a particular brand and set of device models.

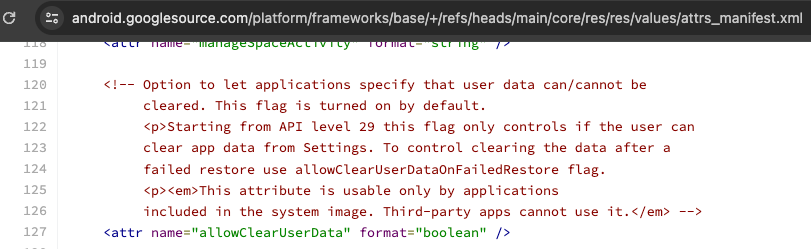

To further substantiate the OEM component claim, there are two additional details. The package name under which this ‘app’ gets installed is: com.android.oem. In addition, the application manifest uses a particular attribute called allowClearUserData. This attribute is interesting because, as the Android Framework source code states, it can only be used “by applications included in the system image. Third-party apps cannot use it.”

Think about this for a second. It seems that a set of devices from a specific vendor is being sold mostly in APAC and African countries, with preinstalled software that is able to heavily monitor user behavior. Where in the supply chain this happens before the device reaches the end user, we don’t know. It does seem to be an OEM application. We do know it is an app that uses all sorts of intrusive permissions.

It seems to have been part of some significant operation. Yet… its domain was left to expire and the app became obsolete. The APK’s behavior raised enough flags for some security solutions to consider it dangerous or malware-like. Luckily, we grabbed the domain. Imagine what would have happened if a threat actor had beaten us to it: millions of devices at the mercy of an attacker.

Exactly how many millions are we talking about? Now that we can read the payload, we can count a characteristic that distinguishes devices better than IP addresses do. An obvious candidate for uniquely identifying a device is the Android ID, which the APK sends in every request. In a 15-day sample, we saw over 11 million unique Android IDs. That is more than the population of a small country, Portugal for example.

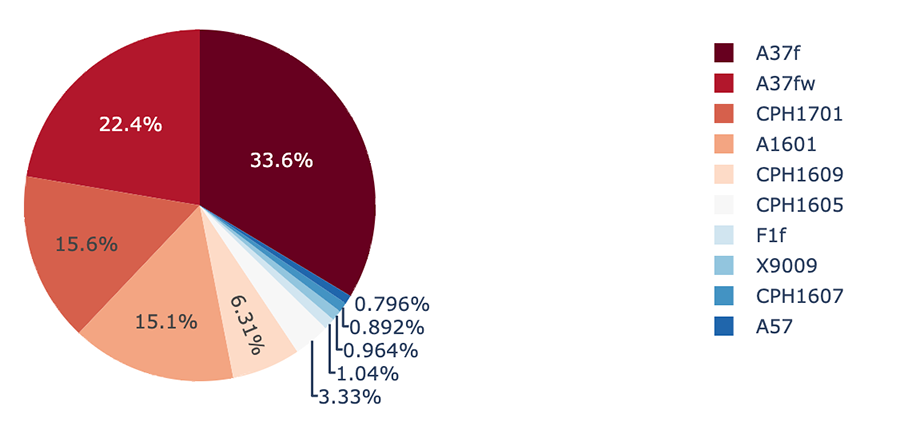
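Counting devices rather than addresses boils down to deduplicating on the identifier carried inside each decoded payload. Below is a minimal in-memory sketch, assuming each decoded request has already been reduced to a (source IP, Android ID) pair; the record and method names are hypothetical. At the scale described here, exact hash sets of tens of millions of strings become memory-hungry, and approximate cardinality counters such as HyperLogLog are a common alternative.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Set;

public class DeviceCounter {
    // Hypothetical shape of one decoded sinkhole hit.
    public record Hit(String sourceIp, String androidId) {}

    public record Counts(int uniqueIps, int uniqueDevices) {}

    // Exact distinct counts of source IPs and of Android IDs over a batch of hits.
    public static Counts count(List<Hit> hits) {
        Set<String> ips = new HashSet<>();
        Set<String> devices = new HashSet<>();
        for (Hit h : hits) {
            ips.add(h.sourceIp());
            devices.add(h.androidId());
        }
        return new Counts(ips.size(), devices.size());
    }
}
```

The two counts can diverge in either direction: many devices behind one carrier-grade NAT share a single IP, while one device moving between networks shows up under several IPs.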

From our assessment of the sample, it overwhelmingly seems to affect OPPO smartphones running ColorOS 3. ColorOS is a user interface created by OPPO based on the Android Open Source Project; its first version was launched in September 2013. OPPO had released many Android-based smartphones before, but did not label them as ColorOS. Below are the top ten affected models, all of which seem to be relatively old.

Going down the rabbit hole

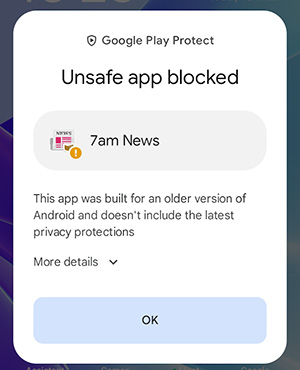

We talked about one APK; what about the others? Of the four APKs we could dig up on VirusTotal, three were this OEM-like background application, and the fourth is a bit different. It is called 7AM NEWS.

“ 7AM news deliver a[SIC] personalized headline stories, news and web content with the latest big data technologies.

- Penalization with minimum efforts[SIC]. The "intelligent mailman" serves you based on your data and usage.The more you use, the smarter it gets.

- Optimizing for challenging Internet environments.

- Reader Mode provides a clean and delightful reading experience We are trying to make a delightful experience for everyone.

- Contents we currently of[SIC]: Indian news, Indonesia news, Thailand news, Philippine news, Malaysia news, Vietnam news and Kenya news.”

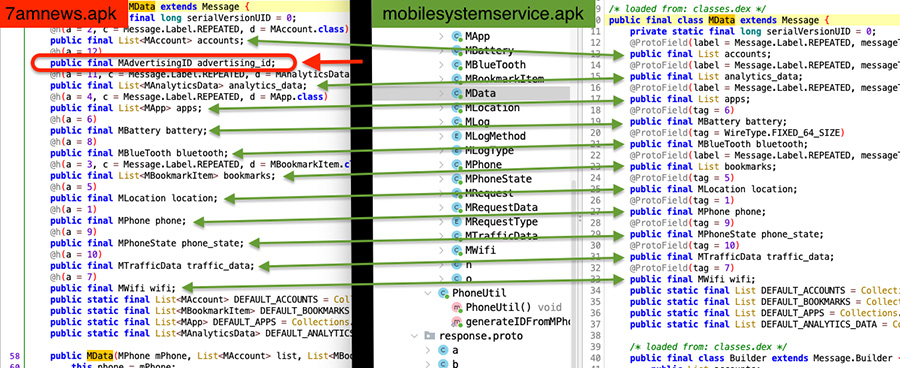

This is interesting, since these are the geographies with significant traffic in our telemetry data. But this is a very old application, and its supporting website isn’t even online anymore. Could this outdated news application be the one sending telemetry data to our sinkhole? That is one hypothesis, but looking more carefully at the decompiled code of the OEM APK and the 7AM News APK, we notice a subtle difference: the 7AM News app (on the left) has a protobuf field called advertising_id, which the OEM APK (on the right) does not.

As it turns out, we have not observed the advertising_id field in our sinkhole logs, so we assume the connections most likely originate from the OEM APK.

The purpose of it all

OK, we know this application lives on millions of devices, but we are not exactly sure how it ended up there or how it was being used. What other information can we gather to fully understand what is going on?

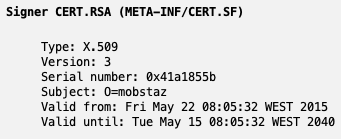

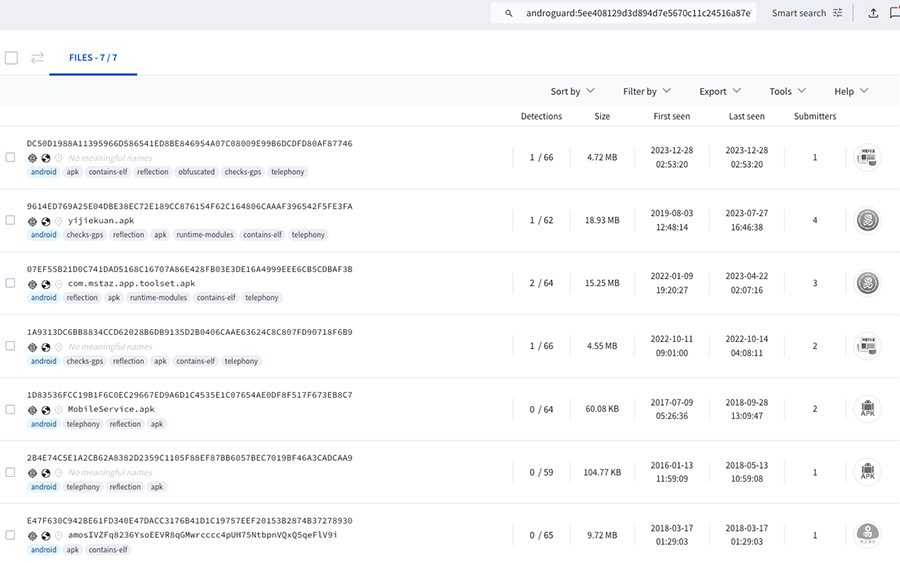

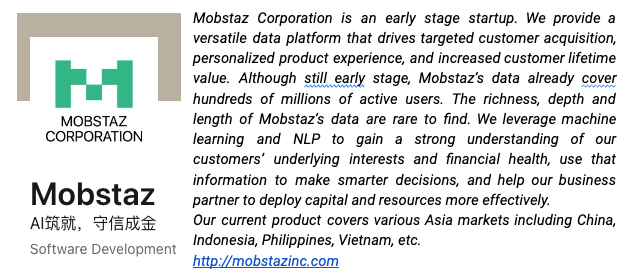

Well, the APK is signed with a certificate valid for 25 years starting May 22, 2015, and the signer organization name is: mobstaz.

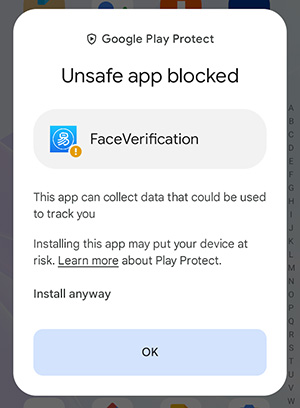

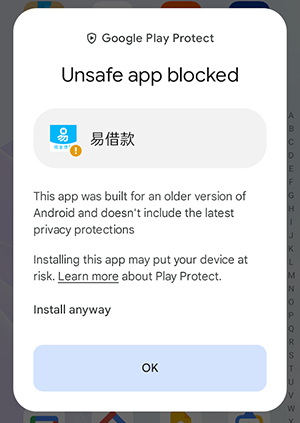

Yes... mobstaz. A creative name that, once again, goes to show how reality can be stranger than fiction. A search for the signer’s fingerprint on VirusTotal yields 7 other APKs, that is, applications signed with the same certificate. Besides the OEM ones, two were the 7AM News app, another two were an app called FaceVerification, and one seems to be some sort of credit card banking app(?).

Perhaps unsurprisingly, Google Play Protect marks all of the above apps as unsafe if we try to install them, except for the ‘OEM’ ones.
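Pivoting on the signer works because a certificate fingerprint is just a hash of the certificate’s DER encoding, identical for every APK signed with that certificate. The sketch below computes a SHA-256 fingerprint with the JDK, assuming the signing certificate has already been exported to a PEM or DER file; the class and method names are hypothetical, and the digest VirusTotal displays for a given view may use a different algorithm. For APKs, `apksigner verify --print-certs` also reports the signer certificate’s SHA-256 digest directly.

```java
import java.io.FileInputStream;
import java.io.InputStream;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.security.cert.CertificateFactory;
import java.security.cert.X509Certificate;
import java.util.HexFormat;

public class SignerFingerprint {
    // Lowercase hex SHA-256 digest of arbitrary bytes.
    public static String sha256Hex(byte[] data) throws NoSuchAlgorithmException {
        return HexFormat.of().formatHex(MessageDigest.getInstance("SHA-256").digest(data));
    }

    // Loads one X.509 certificate (PEM or DER), prints subject and validity window,
    // and returns the SHA-256 fingerprint of its DER encoding.
    public static String certificateFingerprint(String path) throws Exception {
        try (InputStream in = new FileInputStream(path)) {
            X509Certificate cert = (X509Certificate) CertificateFactory.getInstance("X.509").generateCertificate(in);
            System.out.println("Subject:    " + cert.getSubjectX500Principal());
            System.out.println("Valid from: " + cert.getNotBefore() + " to " + cert.getNotAfter());
            return sha256Hex(cert.getEncoded());
        }
    }
}
```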


It seems all of these apps, in one form or another, gathered telemetry about their users for further processing. We may never know the actual purpose, but we can try. Digging further into the applications’ behavior and the URL endpoints they use hints that these apps are connected to a software development company called MOBSTAZ CORPORATION. On LinkedIn, they claim to be an early stage startup:

The website is down. The redirect, mstaz[.]com, is also down. If we fire up archive.org, we can ‘go back in time’ to try to figure out more information about the company:

Unfortunately, Mozhi Technology doesn’t seem to exist anymore either, and their website just shows a brief statement.

Regardless, the information gathered allows us to paint a picture of what the company was doing and why it collected data at scale from as many users as it could. At some point, and for some period of time, they seem to have been able to ship an OEM application for this purpose. The objective seems to have been a highly targeted, highly personalized intelligence platform.

Why they stopped operating, or what exactly happened, we don’t know. We do know that, like many startups, they seem to have gone defunct. And their infrastructure was left lingering, running as background noise on the Internet. Until we picked it up.

This happens all the time. It is not an isolated incident. It will continue to happen and, most likely, more often. Every time it does, a race begins to see who gains control of the obsolete resources. And although the dangers are evident, who will win those resources, and for what purposes they will be used, is not clear at all.

Conclusions

Mobile applications have become an integral part of our daily lives, providing us with a wide range of functionalities such as communication, entertainment, shopping, banking, and much more. As these apps store more sensitive information like personal data, financial details, and login credentials, their security has become increasingly important. Users are now increasingly relying on their mobile devices for sensitive information and critical services, so it is essential that these apps are secure from cyber attacks during their lifetime. But what happens when their lifetime runs out?

The rapid obsolescence of mobile devices and applications can turn out to be catastrophic for users, as in the case study we presented. This was just one example we chose to demonstrate the problem; unfortunately, it is far from an isolated one. The impact can range from stolen credentials used in credential stuffing attacks to personal information theft, and from user monitoring to rogue application installation; it really depends on which application becomes vulnerable.

Recommendations

Generally speaking, there are some rules of thumb we can follow to increase our overall security when it comes to mobile devices and software:

- Keep the device and applications up to date. This will ensure the latest security patches and features are in place.

- Enable automatic updates, when possible. This can usually be done via the app store or inside the applications.

- Don’t install apps from untrusted sources. It is usually not a good idea, and an untrusted source is a poor indicator of the publisher’s trustworthiness.

- Do regular maintenance. Check your device for old apps and, if you are not using them anymore, uninstall them. You gain space and decrease your security risk.

- Lastly, if the device reaches the end of life and it is not receiving security updates anymore, you should consider changing to a new one.

As for the discussed use case, if you are wondering if your device has the outdated application installed, it is not straightforward to check. If you are comfortable with the command line and running adb, you can check if the offending package is installed by running the command:

$ adb shell dumpsys package com.mobilesystemservice.android

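If the above command outputs data about the package being installed, you can try to remove it by issuing:

$ adb uninstall com.mobilesystemservice.android

Since the component appears to ship as part of the system image, this command may be refused. In that case, the following removes the package for the current user without requiring root (it stays on the system partition and can come back after a factory reset):

$ adb shell pm uninstall --user 0 com.mobilesystemservice.android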

In case the command line is not for you, there are apps from the Play Store that specialize in uninstalling other apps and bloatware. We don’t recommend any of them in particular. You can try to find and remove the component this way, but it is not guaranteed that you will succeed. If you do try, don’t forget you just installed another application on your smartphone…

For those with access to our platform, this data is available and visible within the Insecure Systems risk vector for organizations for which we’ve observed affected devices on their networks.

Acknowledgements

Special thanks to Pedro Camelo for his invaluable help and continuous support throughout this research.